Artificial intelligence is everywhere these days. It’s making medical predictions, detecting fraud, filtering job applications, and powering the chatbots we interact with daily. But here’s the uncomfortable truth: most AI systems aren’t built with security in mind. They can be tricked, poisoned, or misused in ways that traditional cybersecurity tools don’t even begin to cover.

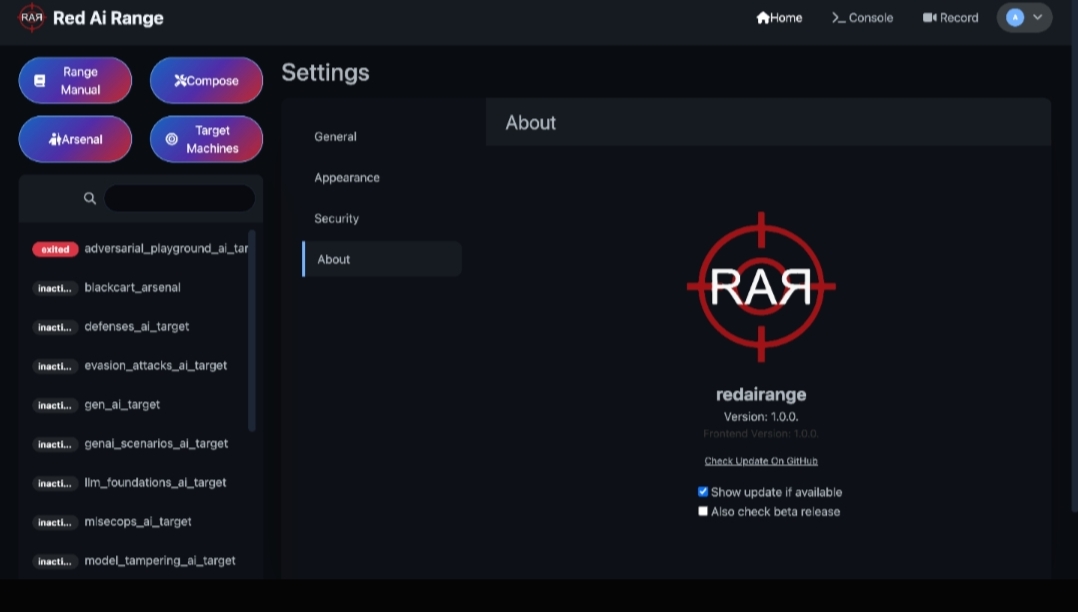

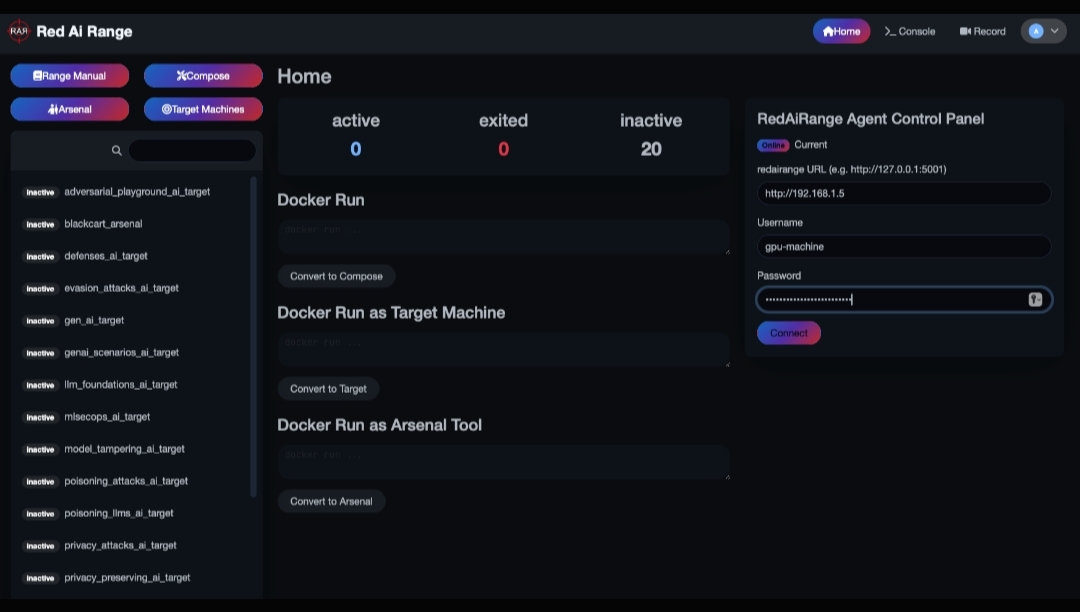

That gap is exactly what the new Red AI Range (RAR) is trying to close. It’s an open-source platform built to let security teams stress test AI models the same way we’ve been red teaming networks and applications for years. Think of it as a crash test facility for machine learning systems. Instead of waiting for attackers to figure out how to exploit your AI, RAR lets you run those scenarios yourself—on your own terms.

Why AI Needs Red Teaming in the First Place

If you’ve been in security for a while, you know how this goes. Every new technology boom—cloud, mobile, IoT—comes with a wave of “we’ll worry about security later.” AI is no different. The difference here is that AI’s weaknesses don’t always look like traditional vulnerabilities.

Here are a few examples that have already shown up in the wild:

- Researchers have fooled image-recognition systems into mislabeling objects just by adding tiny, almost invisible noise to the input.

- Chatbots have been tricked into bypassing safety filters with carefully worded prompts—what’s now called prompt injection.

- Attackers have poisoned training datasets so that fraud-detection models start letting malicious activity slip through.

- In some cases, models have leaked sensitive training data just by being queried in the right way.

These aren’t bugs in the code. They’re flaws in how machine learning itself works. And if you’re deploying AI at scale, you can’t just cross your fingers and hope no one figures them out.

What Exactly Is Red AI Range?

So what does RAR actually do? At its core, it’s a testing environment for AI security. Security teams can spin up containerized labs where they run attack simulations against their own AI systems. Instead of theorizing about how an adversarial attack might play out, you get to see it in action.

A few things that stand out about the tool:

- Ready-to-use attack modules – You don’t need to build everything from scratch. RAR comes with built-in scenarios for adversarial examples, model poisoning, LLM prompt injection, and more.

- Automated pipelines – It isn’t just a one-off test. RAR can integrate into your DevSecOps process so every new model build is automatically put through its paces.

- Flexibility – Since it’s open source, teams can tweak it, add their own scenarios, or adapt it to very specific AI environments.

- Focus on the real world – It’s not just about theoretical vulnerabilities. The goal is to simulate attacks the way they’d actually unfold in production.

In other words, it’s red teaming, but built for the quirks of AI instead of just web apps or networks.

Why This Matters for Security Teams

Let’s be honest: most organizations adopting AI today don’t have in-house experts in adversarial machine learning. That makes RAR valuable because it lowers the barrier to entry. You don’t need a PhD in data science to understand how your fraud-detection model or chatbot could be misused—you just run the scenarios.

Some practical wins this gives you:

- Catch weaknesses before attackers do. Whether it’s a model that can be evaded or a deployment pipeline with sloppy defaults, RAR helps you spot it early.

- Build tougher models. By testing against real attacks, data scientists and engineers can retrain or redesign systems with security in mind.

- Stay ahead of regulations. Governments are starting to push AI accountability hard. Being able to show you’re red teaming your models could be a big compliance advantage.

- Educate your team. Nothing drives home a vulnerability like watching your “smart” AI completely fail because of a few manipulated inputs.

Where You’d Actually Use This

It’s easy to talk about AI security in the abstract, but let’s ground it. Here are a few places RAR could make a difference today:

- Hospitals and clinics – Making sure diagnostic AI systems don’t get thrown off by corrupted images or poisoned data.

- Banks and fintech – Testing fraud-detection AI against adversarial strategies designed to slip past filters.

- Autonomous vehicles – Checking whether camera-based AI can be tricked by altered road signs.

- Generative AI apps – Hardening LLMs against prompt injections that try to force them into unsafe responses.

- Cloud-based AI services – Validating that deployment and scaling pipelines aren’t introducing security holes.

Basically, if AI is running something important in your organization, you need to know how it behaves under attack.

Looking Ahead

Right now, AI red teaming is still new territory. Attack techniques are evolving, and defenders are scrambling to keep up. The reality is, tools like Red AI Range won’t solve everything—but they move the needle in the right direction.

Expect the platform to grow quickly, especially since it’s open source. More contributors means more attack modules, more integrations, and more creative ways to break (and then fix) AI systems. Over time, we’ll probably see RAR or similar tools become as standard in AI pipelines as penetration testing is for web apps today.

Final Thoughts

The takeaway is simple: if you’re deploying AI, you can’t ignore its unique security risks. Traditional firewalls and scanners won’t save you when your chatbot starts leaking sensitive data or your fraud-detection model gets manipulated.

Red AI Range gives teams a way to take control of that problem. It’s not about fear—it’s about preparation. The same way we wouldn’t roll out a new web app without pen-testing it, we shouldn’t roll out AI systems without putting them through adversarial stress tests.

The attackers are already experimenting. With tools l Like RAR, defenders finally have a way to experiment too—before it’s too late

2 Comments

Simply wish to say your article is as amazing The clearness in your post is just nice and i could assume youre an expert on this subject Well with your permission let me to grab your feed to keep updated with forthcoming post Thanks a million and please carry on the gratifying work

Keep updating knowledge in cyber Security