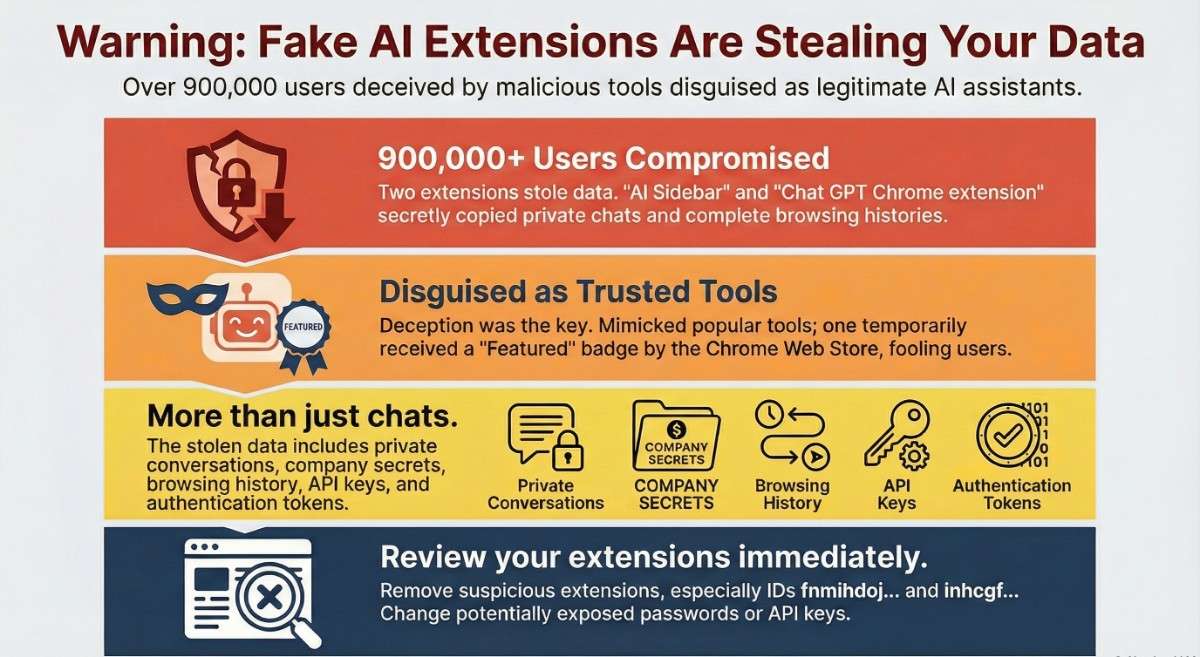

Malicious Chrome Extensions Secretly Copy ChatGPT and DeepSeek Chats of 900K Users

Security researchers have uncovered a malicious browser extension campaign.

The campaign used fake Chrome extensions to steal private conversations from ChatGPT and DeepSeek. The stolen data was not limited to chats: complete browsing histories were taken too. More than 900,000 users worldwide were affected, and most had no idea their data was being siphoned off.

Researchers at OX Security discovered the activity while analyzing browser extensions. Most troubling, the fake tools closely resembled legitimate AI assistants that people trust, which helped them spread widely before anyone noticed they were harmful.

Fake AI Tools Posing as Legitimate Assistants

The fake extensions copied the look of AITOPIA AI’s sidebar, embedding themselves in the browser and offering instant access to ChatGPT, Claude, and DeepSeek. Promising extensive functionality, they attracted students, developers, and people who use AI every day. The polished design concealed their real purpose, which was data theft rather than assistance.

Two extensions were identified in the campaign:

- Chat GPT Chrome extension with GPT-5 Claude Sonnet and DeepSeek AI

  - Over 600,000 installs

  - Extension ID: fnmihdojmnkclgjpcoonokmkhjpjechg

- AI Sidebar Featuring Deepseek ChatGPT Claude and Others

  - Over 300,000 installs

  - Extension ID: inhcgfpbfdjbjogdfjbclgolkmhnooop

Notably, one of the extensions once carried the Chrome Web Store’s “Featured” badge, which lent it credibility until its malicious behavior came to light.

How Data Theft Happens

Once installed, the extensions use Chrome’s tabs.onUpdated event to monitor which pages the user visits. Each compromised browser is assigned a unique ID so the attackers can correlate data collected across sessions.
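To make the permission angle concrete, here is a minimal defensive sketch, not taken from the OX Security report, that flags an extension manifest whose requested access would allow this kind of monitoring. The function name `flag_risky_manifest`, the sample manifest, and the choice of checks are illustrative assumptions; a match is only a reason to look closer, not proof of malice.

```python
from typing import Any

# AI chat sites this report says the extensions watched for.
WATCHED_HOSTS = ("chatgpt.com", "deepseek.com")
# Match patterns that grant access to every site.
BROAD_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def flag_risky_manifest(manifest: dict[str, Any]) -> list[str]:
    """Return reasons why an extension manifest deserves a closer look."""
    reasons: list[str] = []
    permissions = [p for p in manifest.get("permissions", []) if isinstance(p, str)]

    # Manifest V3 lists host patterns separately; V2 mixes them into "permissions".
    patterns = list(manifest.get("host_permissions", []))
    patterns += [p for p in permissions if "://" in p or p == "<all_urls>"]
    for script in manifest.get("content_scripts", []):
        patterns += script.get("matches", [])

    if "tabs" in permissions:
        reasons.append("requests 'tabs', which exposes tab URLs to tabs.onUpdated listeners")
    if any(p in BROAD_PATTERNS for p in patterns):
        reasons.append("has access to every site")
    for host in WATCHED_HOSTS:
        if any(host in p for p in patterns):
            reasons.append(f"explicitly targets {host}")
    return reasons


# Hypothetical manifest used only for illustration.
sample = {
    "manifest_version": 3,
    "permissions": ["tabs", "storage"],
    "host_permissions": ["https://chatgpt.com/*"],
}
for reason in flag_risky_manifest(sample):
    print("-", reason)
```

Many legitimate extensions request the same permissions, so a check like this is best used to prioritize manual review.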

When users access chatgpt.com or deepseek.com, the extensions:

- Inspect page content directly within the browser

- Capture user prompts, AI responses, and the unique identifier of each chat session

- Store the collected data locally

- Encode it with Base64

- Exfiltrate it in batches every 30 minutes

The stolen data is sent to attacker-controlled servers, including deepaichats[.]com and chatsaigpt[.]com, which are part of the attackers’ command-and-control infrastructure.
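Base64 is an encoding, not encryption, so an analyst who finds such a blob in captured traffic or in extension storage can reverse it directly. The sketch below shows one way to do that. The helper name `decode_captured_blob` and the sample record are hypothetical, and treating the decoded bytes as JSON is an assumption: the report does not describe the actual payload format.

```python
import base64
import binascii
import json


def decode_captured_blob(blob: str):
    """Decode a Base64 string: parsed JSON if possible, else text, else None."""
    try:
        raw = base64.b64decode(blob, validate=True)
    except (binascii.Error, ValueError):
        return None  # not valid Base64
    text = raw.decode("utf-8", errors="replace")
    try:
        return json.loads(text)  # assumption: payload might be JSON
    except json.JSONDecodeError:
        return text


# Made-up record for illustration only; not a real captured payload.
fake_record = {"session": "example-session", "prompt": "hello"}
blob = base64.b64encode(json.dumps(fake_record).encode("utf-8")).decode("ascii")
print(decode_captured_blob(blob))
```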

Unlike legitimate AI tools, which disclose their data handling up front, these extensions operate covertly. They collect complete chat logs, internal URLs, and private browsing activity without users’ knowledge or consent.

Why This Matters

People often share highly sensitive data with AI tools. Compromised conversations can expose:

- Proprietary source code and intellectual property

- Internal business strategies and financial details shared by employees

- Personally identifiable information (PII)

- Credentials, API keys, and authentication tokens

Complete browsing histories let attackers profile users and map corporate systems. These details enable targeted phishing that impersonates trusted contacts. For organizations, leaked data raises the risk of corporate espionage and regulatory fines. Even a seemingly minor log can become material for later attacks.

Evasion and Persistence Methods

The attackers combined several techniques to evade detection, slow down analysis, and keep the operation running:

- Hosting policy pages and infrastructure on Lovable.dev to obscure who operates them

- Requesting consent for tracking under the guise of anonymous data collection

- Redirecting users from one malicious extension to the other, so removing one leads straight to its counterpart

- Imitating legitimate logos and interfaces so the extensions look nearly identical to the real thing at first glance

As of January 7, 2026, both extensions were still available for download despite public disclosure. The “Featured” badge had been removed, but there were signs the extensions had been updated as recently as October 2025.

Indicators of Compromise

Malicious Extensions

- Chat GPT Chrome extension with GPT-5 Claude Sonnet and DeepSeek AI

  - ID: fnmihdojmnkclgjpcoonokmkhjpjechg

  - Version: 1.9.6

- AI Sidebar Featuring Deepseek ChatGPT Claude and Others

  - ID: inhcgfpbfdjbjogdfjbclgolkmhnooop

  - Version: 1.6.1
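One way to check a machine for these IDs is to look inside Chrome’s user data directory, where each profile stores installed extensions under `Extensions/<extension ID>/<version>/`. The following is a minimal sketch under that assumption. The function name `find_flagged_extensions` is hypothetical, and the default path shown is the usual Linux location, so adjust it for your OS and browser channel.

```python
from pathlib import Path

# Extension IDs listed in this report.
FLAGGED_IDS = {
    "fnmihdojmnkclgjpcoonokmkhjpjechg",
    "inhcgfpbfdjbjogdfjbclgolkmhnooop",
}


def find_flagged_extensions(user_data_dir: Path) -> list[tuple[str, str, list[str]]]:
    """Return (profile, extension_id, installed_versions) for each flagged install."""
    hits: list[tuple[str, str, list[str]]] = []
    if not user_data_dir.is_dir():
        return hits
    # Profiles ("Default", "Profile 1", ...) keep extensions in Extensions/<id>/<version>/.
    for ext_dir in sorted(user_data_dir.glob("*/Extensions/*")):
        if ext_dir.is_dir() and ext_dir.name in FLAGGED_IDS:
            versions = sorted(p.name for p in ext_dir.iterdir() if p.is_dir())
            hits.append((ext_dir.parent.parent.name, ext_dir.name, versions))
    return hits


if __name__ == "__main__":
    # Assumed default location on Linux; change for Windows, macOS, or other channels.
    default_dir = Path.home() / ".config" / "google-chrome"
    for profile, ext_id, versions in find_flagged_extensions(default_dir):
        print(f"{profile}: {ext_id} (versions: {', '.join(versions)})")
```

An empty result only means the IDs were not found in that directory. Other Chromium-based browsers keep their own profile directories and would need to be scanned separately.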

Network Indicators

- deepaichats[.]com – Primary exfiltration endpoint

- chatsaigpt[.]com – Secondary command-and-control server

- chataigpt[.]pro – Infrastructure and policy hosting

- chatgptsidebar[.]pro – Redirect and persistence infrastructure
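The network indicators above can be matched against hostnames taken from proxy or DNS logs. This is a minimal sketch of that idea: the helper names `refang` and `is_flagged_host` are illustrative, and the log format is left to the reader. The domains stay defanged in the source and are only converted at runtime.

```python
# Network indicators from this report, kept in defanged form.
DEFANGED_INDICATORS = [
    "deepaichats[.]com",
    "chatsaigpt[.]com",
    "chataigpt[.]pro",
    "chatgptsidebar[.]pro",
]


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 'example[.]com' into a plain domain."""
    return indicator.replace("[.]", ".").lower()


FLAGGED_DOMAINS = {refang(i) for i in DEFANGED_INDICATORS}


def is_flagged_host(hostname: str) -> bool:
    """True if hostname is a flagged domain or one of its subdomains."""
    host = hostname.strip().lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in FLAGGED_DOMAINS)


# Example: filter hostnames pulled from a log (sample values are made up).
seen_hosts = ["chatgpt.com", "api." + refang("deepaichats[.]com")]
print([h for h in seen_hosts if is_flagged_host(h)])
```

Matching on the full domain or a dot-prefixed suffix avoids false positives on unrelated hosts that merely contain one of the names as a substring.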

Recommended Actions for Users and Organizations

- Review installed Chrome extensions and remove the listed IDs immediately

- Review past AI conversations for sensitive information that may have been exposed

- Rotate any credentials, API keys, or authentication tokens that may have been exposed

- Restrict browser extension installation through enterprise policies

- Install extensions only from well-known publishers that clearly explain how they handle your data
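For the enterprise policy step, Chrome offers the `ExtensionInstallBlocklist` policy, which takes a list of extension IDs to block. The sketch below only generates the JSON for such a policy. The function name `build_blocklist_policy` is hypothetical, and how the policy is deployed depends on the platform (for example, a managed policy JSON file on Linux, Group Policy on Windows, or a configuration profile on macOS), so check Chrome’s enterprise documentation before rolling it out.

```python
import json

# Extension IDs listed in this report.
BLOCKED_EXTENSION_IDS = [
    "fnmihdojmnkclgjpcoonokmkhjpjechg",
    "inhcgfpbfdjbjogdfjbclgolkmhnooop",
]


def build_blocklist_policy(extension_ids: list[str]) -> str:
    """Return a JSON policy document that blocks the given extension IDs."""
    # Deduplicate and sort so the generated file is stable across runs.
    policy = {"ExtensionInstallBlocklist": sorted(set(extension_ids))}
    return json.dumps(policy, indent=2)


print(build_blocklist_policy(BLOCKED_EXTENSION_IDS))
```

A blocklist only stops known IDs. Organizations that want tighter control can pair it with an allowlist so that only approved extensions can be installed.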

Final Thoughts

This campaign reflects a fast-growing trend: attackers increasingly treat browser extensions as a prime attack vector, especially as AI becomes embedded in everyday work.

As AI adoption grows, conversations with AI tools become an increasingly attractive target for attackers.

Browser security matters more than ever. Staying safe means scrutinizing the extensions you install, because ignoring their risks can lead to serious problems later.